How Kandou is Accelerating the Adoption of AI at Scale

Cloud infrastructure is currently the single biggest tech investment category, and with this investment comes the expectation that AI will be able to process those yottabytes of data and begin providing the insights and innovations that will define life in the 21st century.

In reality, processing and exploiting all that data is easier said than done. While the tech is emerging, if we want to do it at scale we need connectivity that is truly fit for purpose.

Here we look at how the needs of data centers and hyperscalers are evolving, and how Kandou’s purpose-built semiconductor solutions can help unlock the next phase of deep learning and artificial intelligence discoveries.

It’s all happening in the cloud

Dependence on the cloud is growing at lightning speed. More than $2 trillion of investment is forecast over the next decade, and more than 90% of organizations already use the cloud – two-thirds of them the public cloud. Reports show that cloud usage is booming, especially in the US and Western Europe, with adopters citing its ability to boost gross margins and profitability, generate more revenue, and accelerate time to market. It’s not just businesses. In total, global data creation is expected to hit 180 zettabytes by 2025. To put that into context, you would need one billion 1TB hard drives to store a single zettabyte of data.
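The hard-drive comparison is simple to check. The short sketch below assumes decimal (SI) units, where 1TB is 10^12 bytes and one zettabyte is 10^21 bytes; the function and variable names are our own, chosen for illustration.

```python
# Assumption: decimal (SI) units, as drive capacities are usually quoted.
TERABYTE = 10**12    # bytes
ZETTABYTE = 10**21   # bytes


def drives_needed(total_bytes: int, drive_bytes: int = TERABYTE) -> int:
    """Number of drives required to hold total_bytes, rounded up."""
    return -(-total_bytes // drive_bytes)  # ceiling division on integers


drives_per_zb = drives_needed(ZETTABYTE)
drives_for_forecast = drives_needed(180 * ZETTABYTE)

print(f"{drives_per_zb:,} x 1TB drives per zettabyte")
print(f"{drives_for_forecast:,} x 1TB drives for 180 zettabytes")
```

On those assumptions, the 180 zettabytes forecast for 2025 would fill 180 billion such drives.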

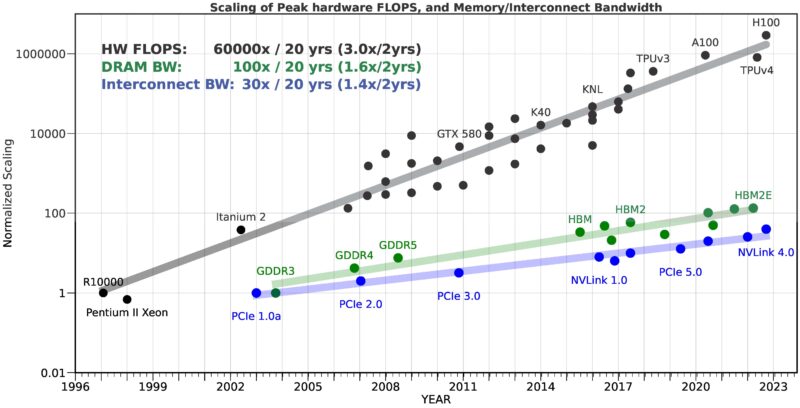

Implicitly – and increasingly explicitly – AI sits at the heart of this shift and the new era it will enable. While peak compute has increased by 60,000x in the past two decades, DRAM has only increased by 100x and interconnect by 30x. That’s a big disparity. AI is the key ingredient that will take all that cloud storage and make it flexible and viable, opening up new pathways. Modern cloud infrastructure relies heavily on elastic resource allocation at high speed. The speed at which you can transfer data flexibly across fluid resource pools such as heterogeneous computing, elastic storage and software-defined networks drives your performance and sets the parameters for success. Which is where things get sticky.
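To see how wide that disparity is, it helps to divide the growth figures by one another. This is a minimal sketch that uses only the three multipliers quoted above; the dictionary keys and the `gap` helper are illustrative names, not part of any Kandou tool.

```python
# Growth over roughly two decades, as quoted in the text.
growth = {
    "peak compute": 60_000,
    "DRAM": 100,
    "interconnect": 30,
}


def gap(fast: str, slow: str) -> float:
    """How many times further `fast` has grown than `slow`."""
    return growth[fast] / growth[slow]


compute_vs_dram = gap("peak compute", "DRAM")
compute_vs_interconnect = gap("peak compute", "interconnect")

print(f"Compute has outgrown DRAM by {compute_vs_dram:,.0f}x")
print(f"Compute has outgrown interconnect by {compute_vs_interconnect:,.0f}x")
```

Taken at face value, compute has pulled ahead of interconnect by a factor of about 2,000, which is the gap connectivity has to close.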

The problem with moving data in the cloud

The greater the quantities of data, the harder it is to wrangle. Connectivity is proving to be the stumbling block among data centers and AI innovators alike. Bottlenecks are slowing processing down, and placing a glass ceiling on the potential of this cloud-first era.

Computation is suffering from slow die-to-die connectivity, with CPUs idle for up to 40% of the time as they wait for the data they need. Storage is limited in terms of high-speed data movement with two to four times the data throughput. And networks are complaining of constrained bandwidth, the demand for which doubles every two years. There’s a traffic jam, and it’s just one of the reasons data centers are not keeping up with the demands of AI. So where are the jams being created and what can we do to free them?
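A doubling every two years compounds quickly. The sketch below assumes the doubling rate stays constant, which is a simplification, and the function name is hypothetical.

```python
def demand_multiplier(years: float, doubling_period_years: float = 2.0) -> float:
    """Bandwidth demand relative to today, assuming steady exponential growth."""
    return 2.0 ** (years / doubling_period_years)


for horizon in (2, 4, 10):
    print(f"After {horizon} years: {demand_multiplier(horizon):.0f}x today's demand")
```

Under that assumption, a network sized for today's traffic would face 32 times the demand in ten years.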

Connectivity and memory pooling are not the only problems, but they are typically not fit for purpose in data centers worldwide. Every one of the connection points sending data on its way needs to move faster to deliver what AI investment is asking for. And Kandou has the semiconductor innovation to help do it.

Maintaining the flow required to grow

What veins and the circulatory system do for the human body, Kandou does for data-intensive cloud infrastructure. Our focus sits squarely on solving signal integrity issues for innovators trying to create those ultra-high-speed environments that facilitate deep learning and AI.

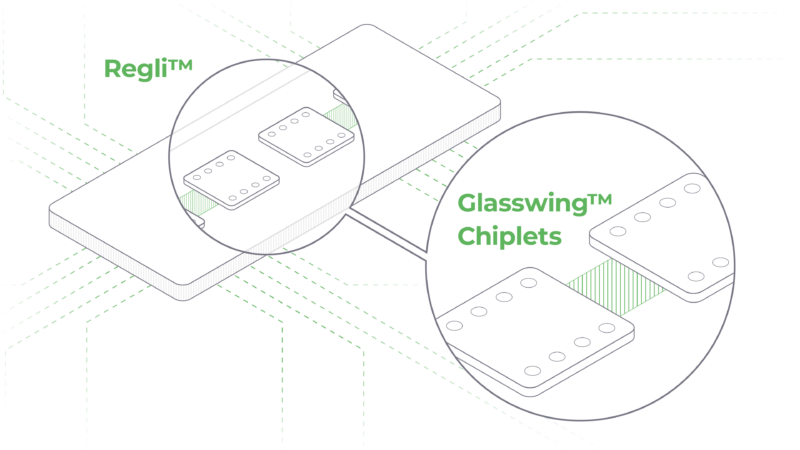

Kandou specializes in retimers that keep data flowing more swiftly, reliably and precisely thanks to advanced mathematical designs and rigorous production and testing methods. Our comprehensive portfolio of connectivity solutions includes Regli™ – a purpose-built PCIe solution that offers the plug-and-play simplicity that data centers and hyperscalers need to raise their game.

The speed – and everything else – AI needs

Time is of the essence. With sub-10-nanosecond latency, Kandou’s Regli is one of the fastest PCIe retimers in the world, and with a bit error rate of just 1E-12 it is also one of the most reliable. But speed and consistency are of limited use without a robust package that addresses all the pain points data specialists face.
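For a sense of scale, a bit error rate can be converted into an average time between bit errors. This rough sketch assumes a PCIe 5.0 link at its raw signalling rate of 32 GT/s per lane, counts one bit per transfer, and ignores encoding overhead; the constant and function names are illustrative.

```python
# Assumption: PCIe 5.0 raw rate of 32 GT/s per lane, one bit per transfer.
RAW_BITS_PER_SECOND_PER_LANE = 32e9
BIT_ERROR_RATE = 1e-12  # the 1E-12 figure quoted in the text


def expected_errors_per_second(lanes: int = 1) -> float:
    """Average number of bit errors per second across the given lane count."""
    return RAW_BITS_PER_SECOND_PER_LANE * lanes * BIT_ERROR_RATE


def mean_seconds_between_errors(lanes: int = 1) -> float:
    """Average interval between bit errors, in seconds."""
    return 1.0 / expected_errors_per_second(lanes)


for lanes in (1, 16):
    print(f"x{lanes} link: one bit error every "
          f"{mean_seconds_between_errors(lanes):.2f} s on average")
```

These are statistical averages at the stated rate, not measurements of Regli.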

- Protocol awareness

The first is protocol awareness. The industry has widely acknowledged that CXL is the game changer data centers have been anticipating, with the deployment of CXL 2.0 solutions enabling memory pooling, cost-effective memory allocation, and the security features necessary for CSP deployment, and CXL 3.1 opening up future composability possibilities.

Because CXL will be used in combination with PCIe, any effective retimer needs to recognize the CXL protocol, which is changing rapidly. Having programmability and flexibility that covers all the use cases is essential, and exactly what Regli’s flexible architecture is built for.

- Debugging

Another key feature of a high-speed retimer is diagnostics, so data centers can adapt and install without experimentation and risk. Teams simply don’t have time to perform high-speed lab testing and scrutinize every aspect of a given PCIe link.

Regli’s on-chip diagnostic debugging means that teams can quickly isolate and identify any problems without the need for isolated testing.

- Security

No retimer solution is viable if it isn’t secure. Regli has secure boot capability as part of its base architecture, so all attack surfaces are covered for complete peace of mind.

- Power

A retimer can do all these things and still not be viable if its energy demands are high. In an era where sustainability is a priority, data centers can’t afford to waste heat, energy, or money. Regli brings all these advantages with reduced power consumption to boot.

There’s one final reason Regli is so well suited to enabling AI at scale: chiplets.

We build product families quickly

The era of the monolithic chip is over, and the era of chiplets has begun. Working with a highly versatile chiplet approach means that testing is far simpler, products are ready for market a lot quicker, and designers have a lot more flexibility in configurations.

Kandou is founded on a key technological breakthrough – CNRZ-5 Chord™ Signaling – which simultaneously sends five bits of data over six correlated wires. The result is double the bandwidth at half the power: 1 Tbps at under one watt. Because Kandou’s CXL 2.0-ready PCIe Gen5 retimers offer such a low bit error rate, high speeds, and versatile configurations, they are the ideal bespoke interconnect technology for the next generation of data centers and AI discoveries.
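Two figures follow directly from the Chord Signaling numbers above: bits carried per wire, and energy per bit. The sketch below is back-of-the-envelope arithmetic only. The comparison with conventional differential signaling (one bit over a two-wire pair) is our own assumption for context, and the one-watt figure is treated as an upper bound.

```python
def bits_per_wire(bits: int, wires: int) -> float:
    """Bits carried per wire in one signalling interval."""
    return bits / wires


def picojoules_per_bit(power_watts: float, bits_per_second: float) -> float:
    """Energy spent per transmitted bit, in picojoules."""
    return power_watts * 1e12 / bits_per_second


chord = bits_per_wire(5, 6)          # five bits over six wires, per the text
differential = bits_per_wire(1, 2)   # assumption: one bit per differential pair
energy_ceiling = picojoules_per_bit(1.0, 1e12)  # 1 Tbps at (under) one watt

print(f"Chord signaling: {chord:.3f} bits per wire")
print(f"Differential:    {differential:.3f} bits per wire")
print(f"Energy per bit:  under {energy_ceiling:.1f} pJ")
```

In other words, 1 Tbps at under one watt works out to less than one picojoule per bit.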

Industry is ready to look past the initial phase of cloud exploration, and begin seeing what machine learning and artificial intelligence can really do. With ground-breaking semiconductor products like Regli, Kandou is helping those cloud investors go beyond, accelerating AI adoption at scale with the tailored connectivity solutions required to unlock the future.